Lecture 6 Outliers and Influential Points

In the previous lecture we looked at diagnostic tools for linear regression models.

We saw that a model can appear inappropriate for the data because of one or two odd points

In this lecture we will examine the issue of odd points in more depth.

6.1 What Exactly is an Outlier?

There is no hard and fast definition.

Essentially, an outlier is defined as a data point that emanates from a different model than do the rest of the data.

Notice that this makes the definition dependent on the model in question.

6.2 Detecting Outliers

Outliers may be detected by:

- Inspection of a scatterplot of the data.

- Inspection of a fitted line plot (where fitted regression line is superimposed on scatterplot).

- Plotting residuals versus fitted values or covariate.

Any data point that lies an unusual distance from the fitted line, or has a large residual in comparison to the other data points, may be considered an outlier.

6.3 Outliers for the Hill Racing Data

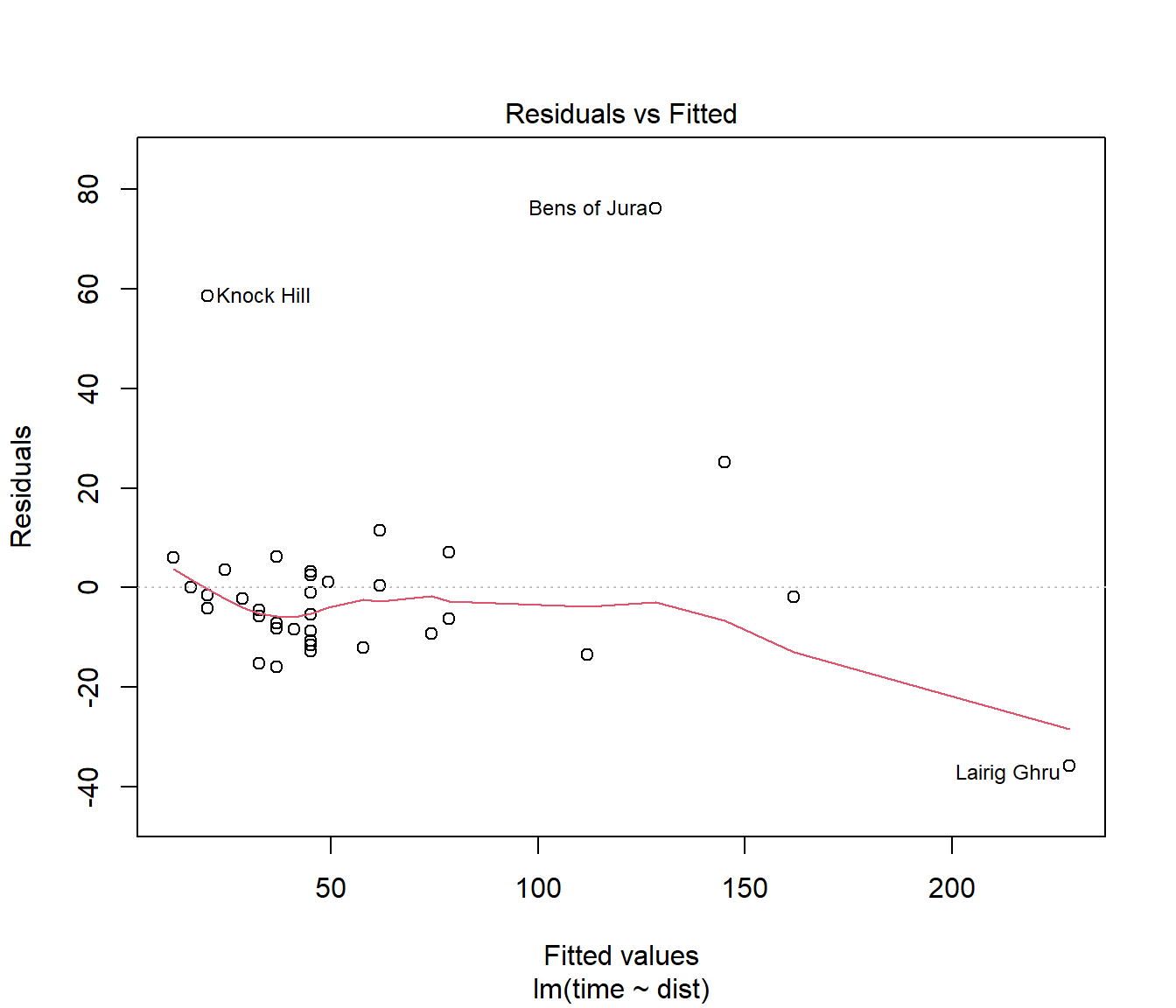

(#fig:Hills.lmDiag1)Residuals v fitted plot for Hills data

Inspection of the plot of residuals versus fitted values suggests three possible outliers: Knock Hill, Bens of Jura, and Lairig Ghru. Most extreme is Bens of Jura.

The Knock Hill outlier may be due to transcription error — time of 18.65 recorded as 78.65 minutes. See the help page for this data.

6.4 What to Do When You Find Outliers

Do not ignore them!

First, investigate whether data have been mis-recorded (go back to data source if possible).

If an outlier is not due to transcription errors, then it may need removing before refitting the model.

In general one should refit the model after removing even a single outlier, because removal of one outlier can alter the fitted model, and hence make other points appear less (or more) inconsistent with the model.

Other approaches covered in this course are to use a transformation to reduce its impact, or use weighted regression to reduce its impact.

The field of robust statistics (not covered in this course) is concerned with more sophisticated ways of dealing with outliers.

6.5 Influence

6.5.1 Leverage

The extent of problems caused by an outlier depends on the amount of influence that this data point has on the fitted model.

The potential influence of a data point can be measured by its leverage. For the ith data point in a simple linear regression, leverage is given by

\[h_{ii} = \frac{1}{n} + \frac{(x_i - \bar x)^2}{s_{xx}}\]

Notice that:

- leverage depends only on the x-value, so that the actual influence of a data point will not depend on the response value;

- data points with extreme x-values (i.e. far from \(\bar{x}\)) have greatest leverage.

As an aside, the sum of the leverages adds to the number of parameters fitted in a model, so the average leverage will be p/n.

6.5.2 Cook’s Distance

The actual influence of a data point can be measured by calculating the change in parameter estimates between

the model built on all the data, and

the model built on data with the data point removed.

Cook’s distance is a measure of influence that is based on exactly this kind of approach.

Cook’s distance depends on both the x and y-values for a data point, and will be large for data points with both high leverage and large standardized residual.

A value of Cook’s distance greater than one is a cause for concern.

6.6 Using plots to detect influence

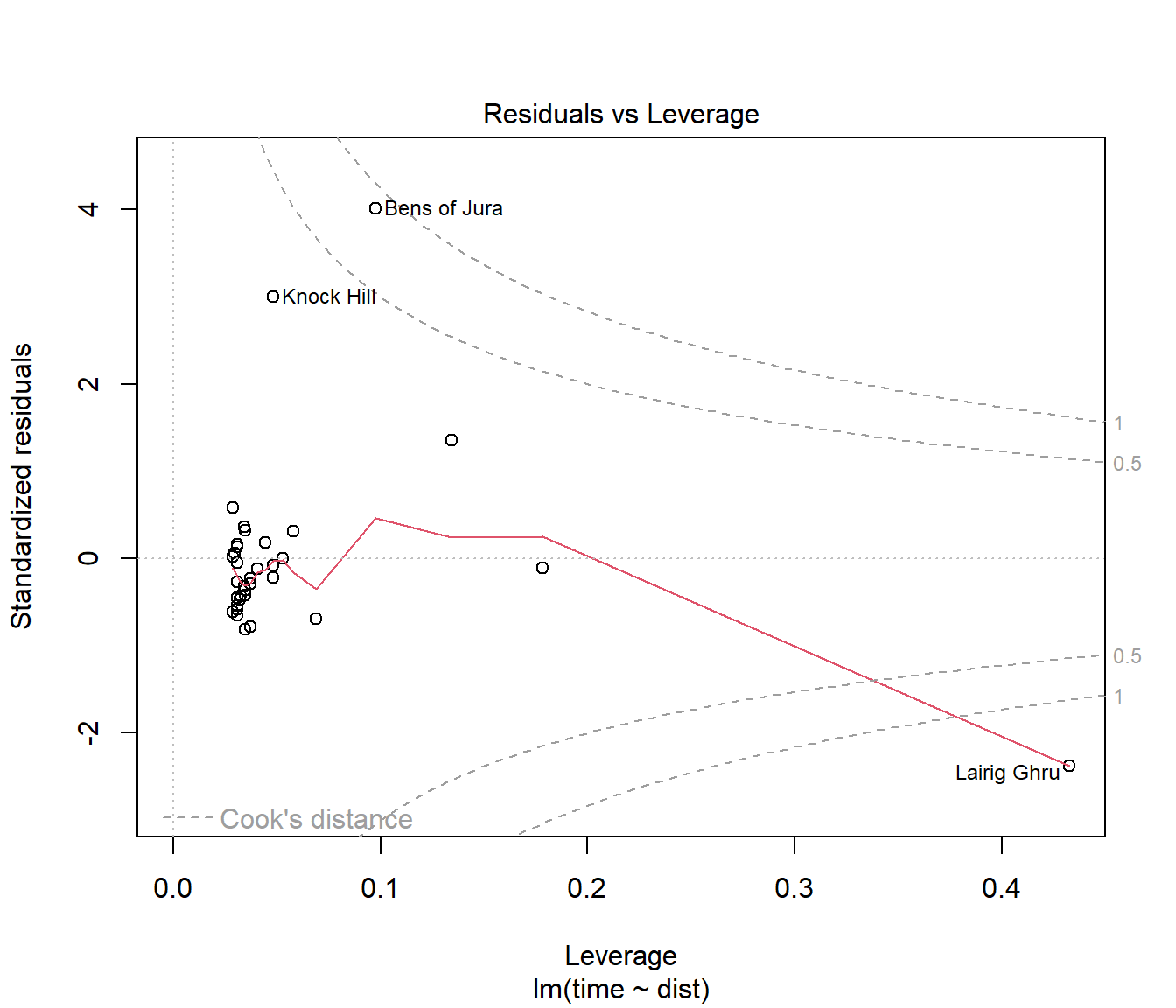

The fourth plot produced in R by plot() applied to a linear model is a plot of standardized residuals against leverage, with contours marking extreme Cook’s distance.

(#fig:Hills.lmDiag4)Fourth diagnostic plot for Hills.lm

For the hill racing data, Lairig Ghru and Bens of Jura have much greater influence than other data points.

N.B. The names of observations appear here because the data is stored with rownames. How we import data is important.

You should observe that the which argument is not set to 4 as you may have expected. There are actually 6 plots available but the vast majority of need is served with plots 1,2,3, and 5.

6.7 Conclusions on the Hill Racing Data

The Scottish Hill Racing Data appears to contain some outliers.

Some of these outliers are data points with high leverage, and hence may have a marked effect on the fitted model.

Recall that the definition of an outlier will depend on the model that we choose to fit.

Perhaps the outliers only appear as odd points because the model is inadequate.

For example, it may be the variable climb has an important effect on race time, and should be included in the model.

6.8 An Exercise

Remove one of the points flagged as unusual in the above analyses. Then re-fit the model to the reduced data. Compare the fit of the two models by plotting their fitted lines on the scatter plot of the data.

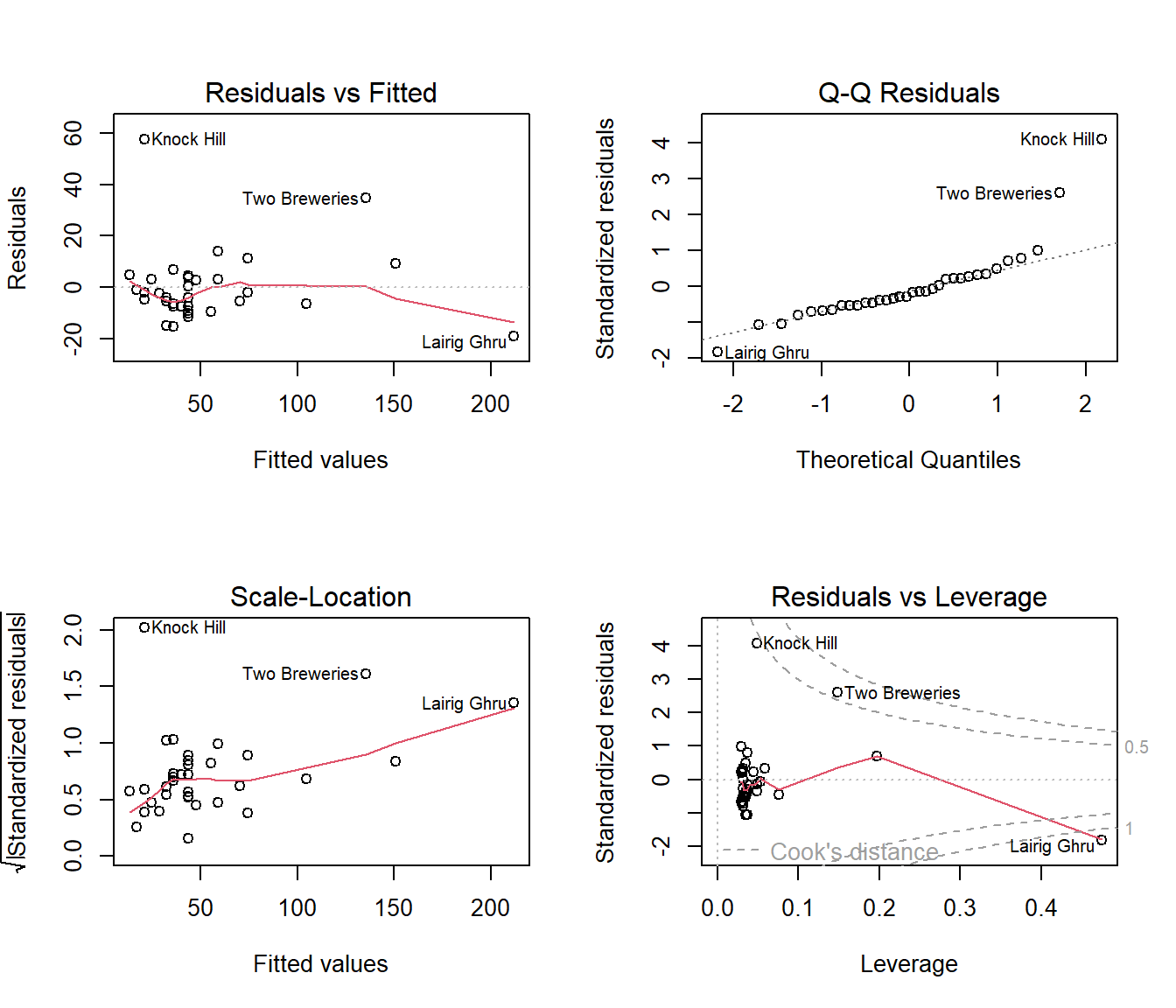

Look at the residual analysis for your new model. Does removal of the point you chose affect the status of the other points?

6.8.1 A Solution

The following code picks out the row for the maximum residual, and then recomputes the linear model with that removed. To be explicit, the code:

- make a row names variable so we can refer to them explicitly using other

tidyversecommands. N.B. The standard rownames are an attribute so don’t work so nicely for thefilter()command which operates on columns. filter()out the one we don’t want- then revert to having rownames so that our graphs have labelled points

- fit the same model using the reduced dataset.

library(tidyverse) # for data manipulation

library(broom) # going to sweep up a bunch of useful stuff...

augment(Hills.lm)# A tibble: 35 × 9

.rownames time dist .fitted .resid .hat .sigma .cooksd .std.resid

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Greenmantle 16.1 2.5 16.0 0.0976 0.0529 20.3 7.06e-7 0.00502

2 Carnethy 48.4 6 45.1 3.21 0.0308 20.3 4.24e-4 0.163

3 Craig Dunain 33.6 6 45.1 -11.5 0.0308 20.2 5.44e-3 -0.585

4 Ben Rha 45.6 7.5 57.6 -12.0 0.0286 20.1 5.51e-3 -0.612

5 Ben Lomond 62.3 8 61.8 0.464 0.0288 20.3 8.25e-6 0.0236

6 Goatfell 73.2 8 61.8 11.4 0.0288 20.2 4.99e-3 0.580

7 Bens of Jura 205. 16 128. 76.2 0.0977 14.5 8.75e-1 4.02

8 Cairnpapple 36.4 6 45.1 -8.78 0.0308 20.2 3.17e-3 -0.447

9 Scolty 29.8 5 36.8 -7.06 0.0347 20.2 2.33e-3 -0.360

10 Traprain 39.8 6 45.1 -5.39 0.0308 20.2 1.20e-3 -0.274

# ℹ 25 more rowsHills2 = Hills |>

rownames_to_column() |>

# so we can use them explicitly like a variable

filter(rowname != "Bens of Jura") |>

# filter out the one we don't want

column_to_rownames()

# go back to having rownames so that our graphs have labelled points

Hills2.lm <- lm(time ~ dist, data = Hills2)

augment(Hills2.lm)# A tibble: 34 × 9

.rownames time dist .fitted .resid .hat .sigma .cooksd .std.resid

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Greenmantle 16.1 2.5 17.0 -0.957 0.0531 14.7 0.000129 -0.0679

2 Carnethy 48.4 6 43.8 4.57 0.0311 14.7 0.00165 0.320

3 Craig Dunain 33.6 6 43.8 -10.1 0.0311 14.6 0.00811 -0.711

4 Ben Rha 45.6 7.5 55.2 -9.65 0.0295 14.6 0.00694 -0.676

5 Ben Lomond 62.3 8 59.1 3.20 0.0300 14.7 0.000778 0.224

6 Goatfell 73.2 8 59.1 14.2 0.0300 14.5 0.0152 0.992

7 Cairnpapple 36.4 6 43.8 -7.42 0.0311 14.7 0.00435 -0.520

8 Scolty 29.8 5 36.1 -6.39 0.0348 14.7 0.00364 -0.449

9 Traprain 39.8 6 43.8 -4.03 0.0311 14.7 0.00129 -0.283

10 Lairig Ghru 193. 28 212. -19.2 0.475 13.9 1.52 -1.83

# ℹ 24 more rowsN.B. The augment() command added columns starting with a period containing several of the values we want for checking assumptions; other added columns will only be explained if they are needed in future. Watch that the first column though is called .rownames but we use the filter() command on a data set, not the results of augment()

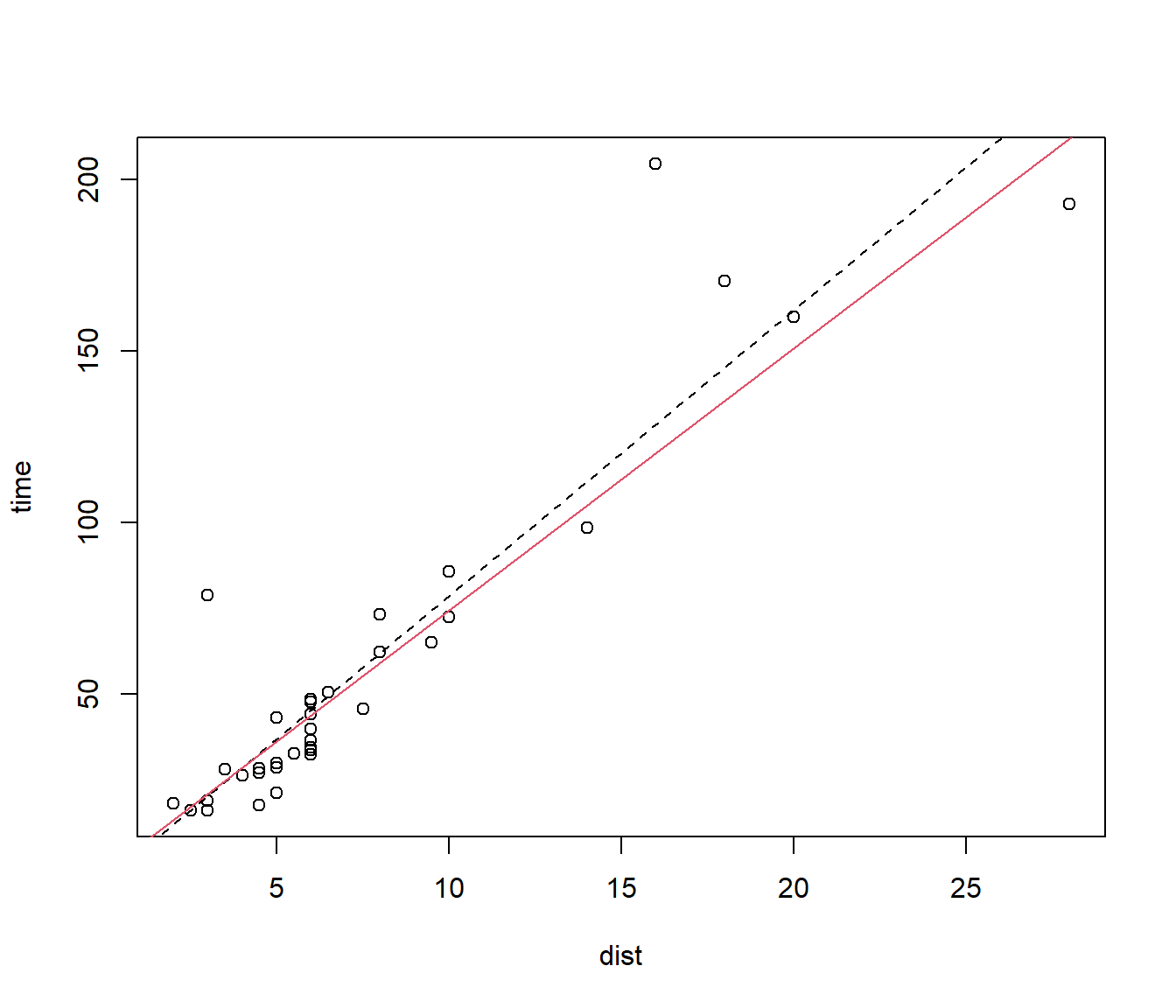

Figure 6.1: Models fitted to Hills data with and without the Bens of Jura race.

N.B. this form of plot() and abline() is old-fashioned, but still good value

(#fig:residsHills2.lm)Diagnostic plots for Hills2.lm

6.9 Deleted residuals

Suppose we think an outlier is dragging the line towards itself, to some extent hiding how unusual it is.

Suppose we were to delete the \(i^{th}\) data point and recalculate the line with it gone. \[ \hat y_{ -i} = \hat\beta_{0,-i} + \hat\beta_{1,-i} \,\, x \] for \(j=1,2,...,n\), \(j\ne i\). The \(-i\) notation means with the \(i\) point removed.

Since we can calculate this “deleted line” at any x, we can do it at the value of \(x_i\).

A deleted residual is \[ e_{i, -i} = y_i - \hat y_{i,-i}\]

We calculate these deleted residuals for each row \(i\) in turn.

We standardise each deleted residual using the variance calculated with the \(i^{th}\) point removed. What we get are known as studentized deleted residuals.

The R function rstudent() calculates the deleted residuals for us.

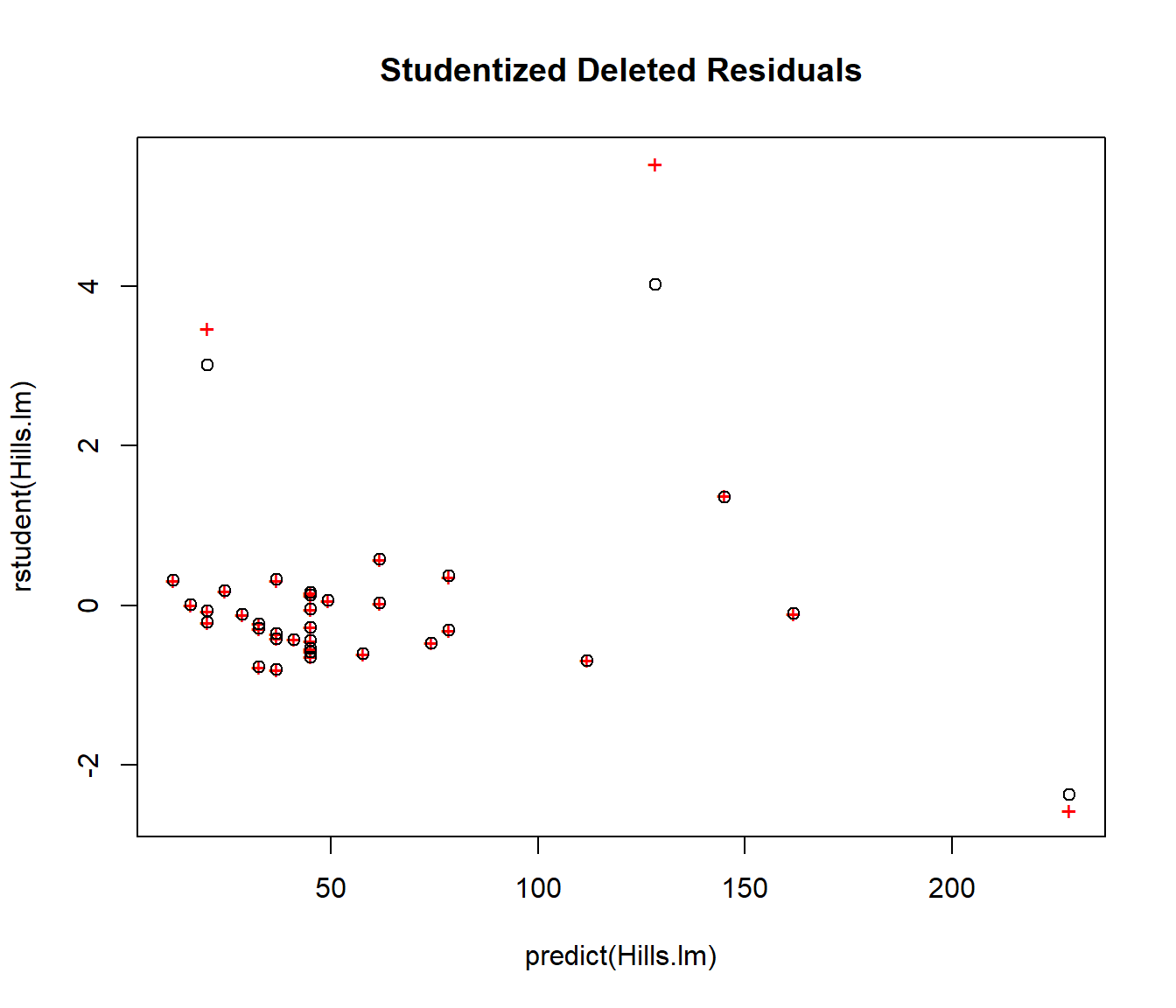

It is often helpful to plot them on the same graph as standardized residuals. If all the points overlap then no data values are influential. But the graph below immediately shows us that deleting the two outliers in the left-middle of the plot would have a big effect, so they are influential. However deleting the point on the right would not change the model much, so it should be left alone.

plot(rstudent(Hills.lm) ~ predict(Hills.lm), col = "red", pch = "+", main = "Studentized Deleted Residuals")

points(rstandard(Hills.lm) ~ predict(Hills.lm), col = "black", pch = 1)

[1] 5.53731[1] 4.018411